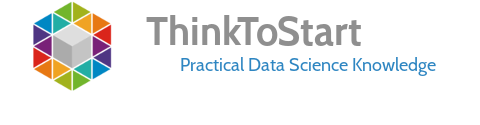

Human faces convey a great deal of information about emotions. In December 2015, Microsoft launched a free service that analyzes human faces and detects the emotions they express. The emotions detected are anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise. These emotions are understood to be communicated cross-culturally and universally through particular facial expressions.

The Emotion API takes a facial expression in an image as input and returns the confidence across a set of emotions for each face in the image, as well as a bounding box for the face, using the Face API.
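To make the shape of that result concrete, here is a minimal sketch that works on a mocked, already-parsed response rather than a live call. It assumes each face arrives as a list with a `faceRectangle` and a `scores` element; every number is invented for illustration, and `dominant_emotion` is a hypothetical helper, not part of any package.

```r
# Mocked parsed response: one list element per detected face.
# All values below are invented for illustration only.
response <- list(
  list(
    faceRectangle = list(left = 68, top = 97, width = 64, height = 64),
    scores = list(
      anger = 0.002, contempt = 0.001, disgust = 0.001, fear = 0.0005,
      happiness = 0.95, neutral = 0.04, sadness = 0.004, surprise = 0.0015
    )
  )
)

# Hypothetical helper: return the highest-scoring emotion for one face
dominant_emotion <- function(face) {
  scores <- unlist(face$scores)   # named numeric vector of confidences
  top <- which.max(scores)        # index of the largest confidence
  data.frame(
    Emotion = names(scores)[top],
    Level = unname(scores[top]),
    stringsAsFactors = FALSE
  )
}

# One row per face in the image
result <- do.call(rbind, lapply(response, dominant_emotion))
print(result)
```

With a real response, the same helper could be applied to the parsed content of the request, provided the structure matches the assumption above.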

The implementation in R makes it possible to analyze human faces in a structured way. Note that you have to create an account to use the Face API.
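Once you have an account, you receive an access key. One way to keep that key out of your scripts is to read it from an environment variable. The sketch below is only a suggestion: the variable name `EMOTION_API_KEY` and the helper `get_api_key` are assumptions made for this example, not something the service requires.

```r
# Hypothetical helper: read an access key from an environment variable
# and fail early with a clear message if it is not set.
get_api_key <- function(var = "EMOTION_API_KEY") {
  key <- Sys.getenv(var, unset = "")
  if (!nzchar(key)) {
    stop("Environment variable '", var, "' is not set.")
  }
  key
}

# For demonstration only: set a placeholder value, then read it back.
# In practice the variable would be set outside the script (e.g. in .Renviron).
Sys.setenv(EMOTION_API_KEY = "XXXX")
emotionKEY <- get_api_key()
```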

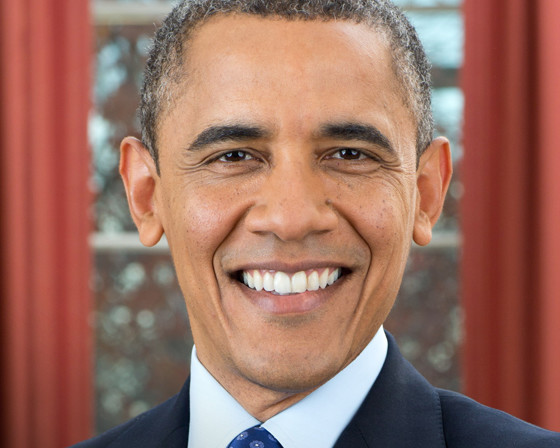

The following walkthrough is based on a simple example:

You need four R packages: httr, XML, stringr, ggplot2.
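If you are unsure whether these packages are already installed, a quick check along the following lines may help. This is a minimal sketch; `missing_packages` is a hypothetical helper written for this post, and the install step is left commented out so nothing is installed without your consent.

```r
required <- c("httr", "XML", "stringr", "ggplot2")

# Hypothetical helper: return the subset of `pkgs` that cannot be loaded
missing_packages <- function(pkgs) {
  available <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
  pkgs[!available]
}

to_install <- missing_packages(required)
if (length(to_install) > 0) {
  message("Missing packages: ", paste(to_install, collapse = ", "))
  # install.packages(to_install)  # uncomment to install from CRAN
}
```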

Analyze Face Emotions with R:


    #####################################################################
    # Load relevant packages
    library("httr")
    library("XML")
    library("stringr")
    library("ggplot2")

    # Define image source
    img.url = 'https://www.whitehouse.gov/sites/whitehouse.gov/files/images/first-family/44_barack_obama[1].jpg'

    # Define Microsoft API URL to request data
    URL.emoface = 'https://api.projectoxford.ai/emotion/v1.0/recognize'

    # Define access key (access key is available via: https://www.microsoft.com/cognitive-services/en-us/emotion-api)
    emotionKEY = 'XXXX'

    # Define image
    mybody = list(url = img.url)

    # Request data from Microsoft
    faceEMO = POST(
      url = URL.emoface,
      content_type('application/json'),
      add_headers(.headers = c('Ocp-Apim-Subscription-Key' = emotionKEY)),
      body = mybody,
      encode = 'json'
    )

    # Show request results (if Status=200, request is okay)
    faceEMO

    # Request results from face analysis
    Obama = httr::content(faceEMO)[[1]]
    Obama

    # Define results in data frame
    o <- as.data.frame(as.matrix(Obama$scores))

    # Convert the list column to numeric; unlike splitting the text at "e",
    # this keeps values in scientific notation (e.g. 9.9e-05) intact
    o$V1 <- as.numeric(unlist(o$V1))
    colnames(o)[1] <- "Level"

    # Define names
    o$Emotion <- rownames(o)

    # Make plot
    ggplot(data = o, aes(x = Emotion, y = Level)) +
      geom_bar(stat = "identity")

    #####################################################################
    # Define image source
    img.url = 'https://www.whitehouse.gov/sites/whitehouse.gov/files/images/first-family/44_barack_obama[1].jpg'

    # Define Microsoft API URL to request data
    faceURL = "https://api.projectoxford.ai/face/v1.0/detect?returnFaceId=true&returnFaceLandmarks=true&returnFaceAttributes=age"

    # Define access key (access key is available via: https://www.microsoft.com/cognitive-services/en-us/face-api)
    faceKEY = 'XXXXX'

    # Define image
    mybody = list(url = img.url)

    # Request data from Microsoft
    faceResponse = POST(
      url = faceURL,
      content_type('application/json'),
      add_headers(.headers = c('Ocp-Apim-Subscription-Key' = faceKEY)),
      body = mybody,
      encode = 'json'
    )

    # Show request results (if Status=200, request is okay)
    faceResponse

    # Request results from face analysis
    ObamaR = httr::content(faceResponse)[[1]]

    # Define results in data frame: one row per landmark, with its x and y
    # coordinates read directly from the parsed list instead of from text
    landmarks <- ObamaR$faceLandmarks
    OR <- data.frame(
      X = sapply(landmarks, function(p) p$x),
      Y = sapply(landmarks, function(p) p$y)
    )