Hello everybody,

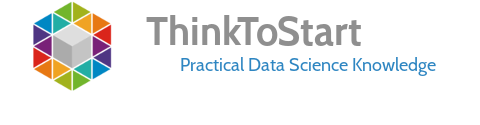

in my first tutorial I described how you can build your own MongoDB and use a JAVA program to mine Twitter either via the search function and a loop or via the Streaming API. But till now you just have your tweets stores in a Database and we couldn´t get any insight in our tweets now.

So we will take a look in this tutorial on how to connect to the MongoDB with R and analyze our tweets.

Start the MongoDB

To access the MongoDB I use the REST interface. This is the easiest way for accessing the database with R when just have started with it. If you are a more advanced user, you can also use the rmongodb package and the code provided by the user abhishek. You can find the code below.

So we have to start the MongoDB daemon. It is located in the folder “bin” and has the name “mongod”. So navigate to this folder and type in:

|

1 |

./mongod --rest |

This way we start the server and enable the access via the REST interface.

R

Let´s take a look at our R code and connect to the Database.

First we need the two packages RCurl and rjson. So type in:

|

1 2 |

library(RCurl) library(rjson) |

Normally the MongoDB server is running on the port 28017. So make sure that there is no firewall or other program blocking it.

So we have to define the path to the data base with:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

database = "tweetDB" collection = "Apple" limit = "100" db <- paste("http://localhost:28017/",database,"/",collection,"/?limit=",limit,sep = "") tweetDB - name of your database Apple - name of your collection limit=100 - number of tweets you want to get Ok now we can get our tweets with [code language="r"]tweets <- fromJSON(getURL(db)) |

And so you saved the Tweets the received. You can now analyze them like I explained in other tutorials about working with R and Twitter.

You can for example extract the text of your tweets and store it in a dataframe with:

|

1 2 3 4 |

tweet_df = data.frame(text=1:limit) for (i in 1:limit){ tweet_df$text[i] = tweets$rows[[i]]$tweet_text} tweet_df |

If you have any questions feel free to ask or follow me on Twitter to get the newest updates about analytics with R and analytics of Social Data.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 |

# install package to connect through monodb install.packages(“rmongodb”) library(rmongodb) # connect to MongoDB mongo = mongo.create(host = “localhost”) mongo.is.connected(mongo) mongo.get.databases(mongo) mongo.get.database.collections(mongo, db = “tweetDB2″) #”tweetDB” is where twitter data is stored library(plyr) ## create the empty data frame df1 = data.frame(stringsAsFactors = FALSE) ## create the namespace DBNS = “tweetDB2.#analytic” ## create the cursor we will iterate over, basically a select * in SQL cursor = mongo.find(mongo, DBNS) ## create the counter i = 1 ## iterate over the cursor while (mongo.cursor.next(cursor)) { # iterate and grab the next record tmp = mongo.bson.to.list(mongo.cursor.value(cursor)) # make it a dataframe tmp.df = as.data.frame(t(unlist(tmp)), stringsAsFactors = F) # bind to the master dataframe df1 = rbind.fill(df1, tmp.df) } dim(df1) |