Hey everybody,

This tutorial is about combining two great and powerful tools: R and Google BigQuery.

BigQuery:

So what is BigQuery? I think Google describes it very well on the developers page:

“Querying massive datasets can be time consuming and expensive without the right hardware and infrastructure. Google BigQuery solves this problem by enabling super-fast, SQL-like queries against append-only tables, using the processing power of Google’s infrastructure. Simply move your data into BigQuery and let us handle the hard work. You can control access to both the project and your data based on your business needs, such as giving others the ability to view or query your data.” (source: https://developers.google.com/bigquery/what-is-bigquery)

And BigQuery is fast. Extremely fast, as you will see later when we query some sample data.

Getting Ready

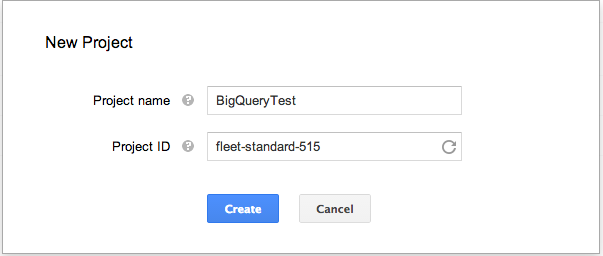

But before we can enjoy the speed we need to do some work. Maybe “work” is the wrong word, as using BigQuery is about as simple as it gets. First we need to create a project for our test in the Google Developers Console.

You can name the project whatever you like, and the same goes for the project ID. The ID shouldn't be too complicated, though, as we will need it later. In the next step we have to activate the BigQuery API for our project, which we can do by clicking on “APIs” on the left-hand side.

Let's bring it to R

And the R part is as easy as the Google part, because the awesome Hadley Wickham wrote a nice and easy-to-use package called “bigrquery”.

Start R and type in:

    # Install both packages from GitHub; install_github() expects "owner/repo"
    devtools::install_github("hadley/assertthat")
    devtools::install_github("r-dbi/bigrquery")


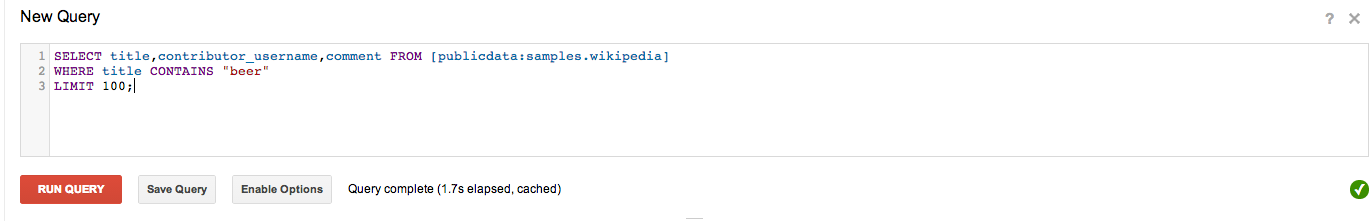

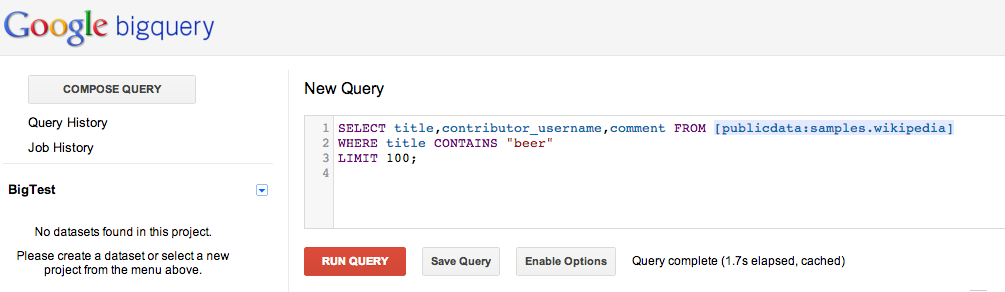

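    library(bigrquery)

    project <- "fleet-standard-515"  # put your project ID here

    # Select three fields from rows whose title contains "beer", capped at 100 rows
    sql <- 'SELECT title, contributor_username, comment FROM [publicdata:samples.wikipedia] WHERE title CONTAINS "beer" LIMIT 100;'

    # Run the query against the public sample data, billed to our own project
    data <- query_exec("publicdata", "samples", sql, billing = project)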

First we set the project ID that we chose when we created the project in the Google Developers Console.

Next we define our SQL query, which we execute in the last line, saving the result in the variable “data”.

In this case I query a sample dataset containing a list of Wikipedia entries.

This dataset has 313,797,035 rows and a total size of 35.7 GB! And you can query it in under 2 seconds!

This is a good example for showing the speed of BigQuery. We want the fields “title”, “contributor_username” and “comment” from every row in the dataset where the title contains “beer”. We then limit the output to 100 entries, because otherwise this example would return roughly 360,00 entries with the word “beer” in the title (a huge amount).
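To make the individual parts of that query easier to see, here is a minimal sketch that assembles the same SQL string from a search term and a row limit in base R. The helper name `build_wiki_query` and its arguments are made up for illustration and are not part of bigrquery; the table and field names are the ones from the query in this post.

```r
# Hypothetical helper (not part of bigrquery): build the tutorial's query
# from a search term and a row limit.
build_wiki_query <- function(term, limit = 100) {
  # Keep the sketch simple: reject terms that would break the quoted literal
  stopifnot(is.character(term), length(term) == 1,
            !grepl('"', term, fixed = TRUE))
  # The limit must be a single positive number
  stopifnot(is.numeric(limit), length(limit) == 1, limit >= 1)

  sprintf(
    'SELECT title, contributor_username, comment FROM [publicdata:samples.wikipedia] WHERE title CONTAINS "%s" LIMIT %d;',
    term, as.integer(limit)
  )
}

# Same query as in the post, built from its parts
sql <- build_wiki_query("beer", limit = 100)
cat(sql, "\n")
```

The resulting string can be passed to `query_exec()` in place of the hand-written `sql` value, which makes it easy to try other search terms or a different limit.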

A browser tab will then open where you have to log in to your Google account and give your R instance permission to access your project. After authenticating you can see the result of your query in R.